The Next Three Things to Do for DeepSeek Success

While DeepSeek is currently free to use and ChatGPT does offer a free plan, API access comes at a cost. DeepSeek's competitive performance at relatively minimal cost has been recognized as potentially challenging the global dominance of American AI models. Leading figures in the American AI sector had mixed reactions to DeepSeek's success and performance. Model-based reward models were made by starting with an SFT checkpoint of V3, then fine-tuning on human preference data containing both the final reward and the chain of thought leading to the final reward. Instability in Non-Reasoning Tasks: Lacking SFT data for general conversation, R1-Zero would produce valid solutions for math or code but be awkward on simpler Q&A or safety prompts. 7b-2: This model takes the steps and schema definition, translating them into corresponding SQL code. DeepSeek-Coder: When the large language model meets programming - the rise of code intelligence. DeepSeek: Built specifically for coding, offering high-quality and precise code generation, but it is slower compared to other models.

Developer's Playground - Follow our step-by-step guide to see how deepseek-coder revolutionizes coding, debugging, and integration. Beating GPT models at coding, program synthesis. It is based on the GPT (Generative Pre-trained Transformer) architecture. DeepSeek R1 can be fine-tuned on your data to create a model with better response quality. DeepSeek-R1 is a state-of-the-art large language model optimized with reinforcement learning and cold-start data for exceptional reasoning, math, and code performance. In so many words: the authors created a testing/verification harness around the model which they exercised using reinforcement learning, and gently guided the model using simple Accuracy and Format rewards. Be it how-tos or the latest happenings in AI, cybersecurity, personal gadgets, platforms like WhatsApp, Instagram, Facebook and more; TOI Tech Desk brings the news with accuracy and authenticity. Evolution & Integration ✨ From Prototype to Powerhouse - Trace the journey from early models to the advanced DeepSeek AI, with each stage introducing new capabilities. As more capabilities and tools come online, organizations are required to prioritize interoperability as they look to leverage the latest advancements in the field and discontinue outdated tools.
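To make the Accuracy and Format rewards mentioned above more concrete, here is a minimal sketch of what rule-based rewards of that kind could look like. The `<think>`/`<answer>` tag layout, the function names, and the `format_weight` parameter are assumptions made for illustration; the text above does not specify how the authors implemented their rewards.

```python
import re

# Assumed output convention (illustrative, not taken from the text above):
# reasoning inside <think>...</think>, then the final answer inside <answer>...</answer>.
_LAYOUT = re.compile(
    r"\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*", re.DOTALL
)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the assumed tag layout, else 0.0."""
    return 1.0 if _LAYOUT.fullmatch(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the extracted answer equals the reference string, else 0.0."""
    match = _LAYOUT.fullmatch(completion)
    if match is None:
        return 0.0  # no parseable answer, so it cannot be checked
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str, format_weight: float = 0.5) -> float:
    """Combine both signals; the weighting is a hypothetical choice."""
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)


if __name__ == "__main__":
    sample = "<think>2 + 2 = 4</think>\n<answer>4</answer>"
    print(total_reward(sample, "4"))
```

Exact string matching only suits tasks with a single canonical answer; a real harness for math or code would more likely normalize expressions or run test cases.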

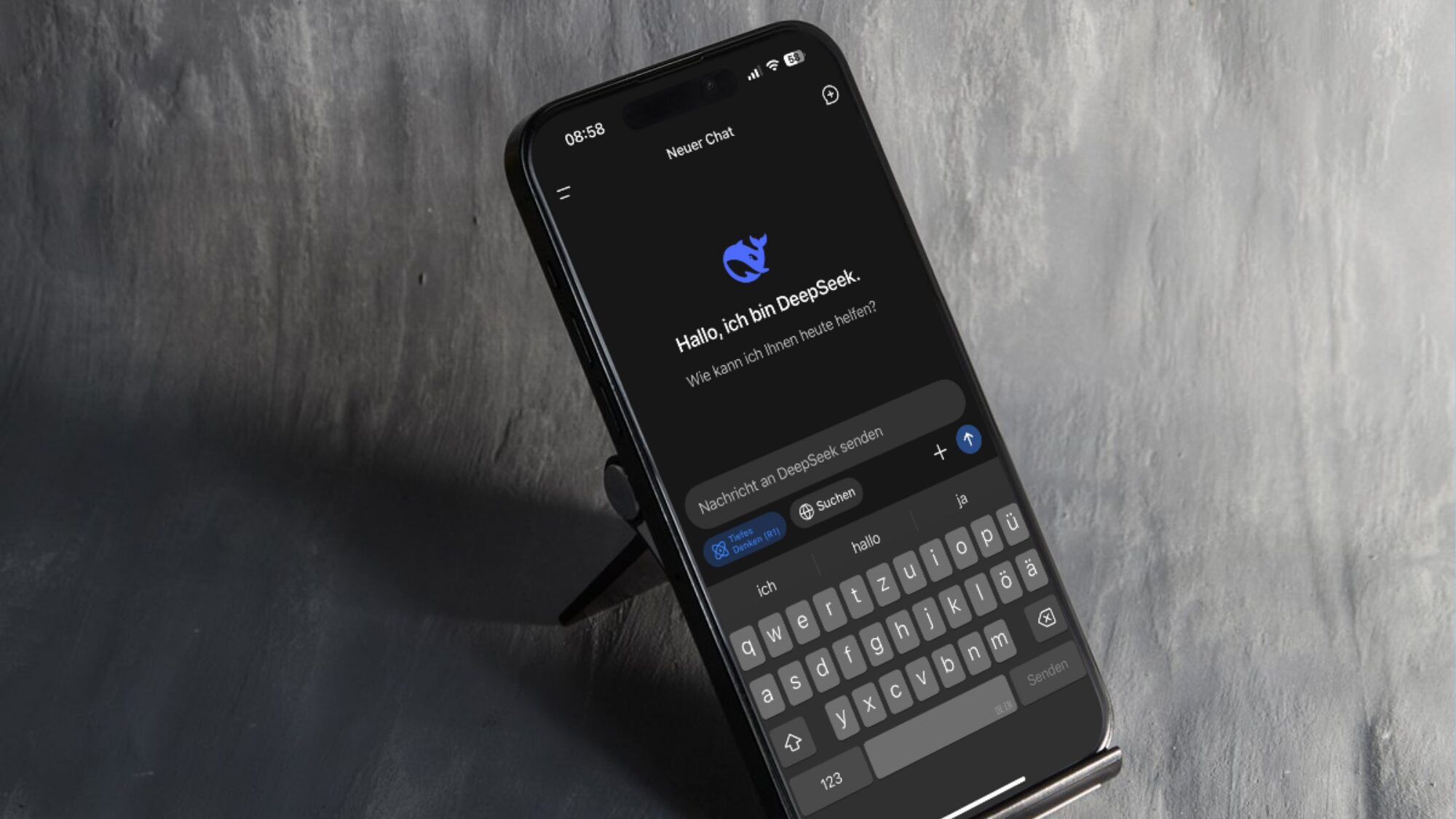

PNTR is a legal designation used by the United States to classify those nations that are subject to preferential tariff treatment. Released on 10 January 2025, the DeepSeek chatbot app surpassed ChatGPT as the most downloaded freeware app on the iOS App Store in the United States by 27 January. Install PocketPal AI from the Google Play Store or App Store. On 27 January 2025, DeepSeek restricted new user registration to phone numbers from mainland China, email addresses, or Google account logins, after a "large-scale" cyberattack disrupted the proper functioning of its servers. By 28 January 2025, a total of $1 trillion of value had been wiped off American stocks. For too long, AI has been seen as a game of scale, where bigger models meant better results. Until now, many assumed that training cutting-edge models required over $1 billion and thousands of the latest chips. The time for hype is over. I have only pointed out that Vite may not always be reliable, based on my own experience and backed by a GitHub issue with over 400 likes.

For example, the synthetic nature of the API updates may not fully capture the complexities of real-world code library changes. OpenAI said that DeepSeek may have "inappropriately" used outputs from their model as training data in a process known as distillation. Readability Problems: Because it never saw any human-curated language style, its outputs were sometimes jumbled or mixed multiple languages. This approach combines natural language reasoning with program-based problem-solving. Hence, the authors concluded that while "pure RL" yields strong reasoning in verifiable tasks, the model's overall user-friendliness was lacking. The Cerebras Wafer Scale Engine (WSE-3), which is 50x larger than conventional GPUs like Nvidia's H100, demonstrates comparable or better yields through innovative defect-tolerance techniques. The DeepSeek team has demonstrated that the reasoning patterns of larger models can be distilled into smaller models, resulting in better performance compared to the reasoning patterns discovered by RL on small models. The evaluation results demonstrate that the distilled smaller dense models perform exceptionally well on benchmarks. Limited Domain: Rule-based rewards worked well for verifiable tasks (math/coding), but handling creative/writing tasks demanded broader coverage. Nvidia's share price dropped 18%. DeepSeek-AI (2024a) DeepSeek-AI. DeepSeek-Coder-V2: Breaking the barrier of closed-source models in code intelligence. But China's breakthrough raises a bigger question: Who will shape the future of artificial intelligence?
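As a rough illustration of the distillation idea described above, the sketch below collects reasoning traces from a larger "teacher" model so that a smaller model could later be fine-tuned on them. Everything here is hypothetical: `build_distillation_set`, the `teacher` callable, the record layout, and the step that keeps only traces whose answer matches a reference are assumptions, not a description of the DeepSeek team's pipeline.

```python
from typing import Callable, Iterable

# A teacher is any callable mapping a question to (reasoning, answer).
# In practice this would wrap a large model; here it is left abstract.
Teacher = Callable[[str], tuple[str, str]]


def build_distillation_set(
    prompts: Iterable[tuple[str, str]],  # (question, reference answer) pairs
    teacher: Teacher,
    samples_per_prompt: int = 4,
) -> list[dict[str, str]]:
    """Collect teacher traces for later supervised fine-tuning of a student.

    Assumption: only traces whose final answer matches the reference are kept,
    and at most one trace is kept per prompt.
    """
    records: list[dict[str, str]] = []
    for question, reference in prompts:
        for _ in range(samples_per_prompt):
            reasoning, answer = teacher(question)
            if answer.strip() == reference.strip():
                records.append(
                    {"prompt": question, "completion": f"{reasoning}\nAnswer: {answer}"}
                )
                break  # one verified trace per prompt is enough for this sketch
    return records


if __name__ == "__main__":
    def toy_teacher(question: str) -> tuple[str, str]:
        # Stand-in for a real model call.
        return ("Add the numbers.", "4")

    print(build_distillation_set([("2+2?", "4")], toy_teacher))
```

The resulting records could then feed an ordinary supervised fine-tuning run on the smaller model; that training step is outside the scope of this sketch.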
